The project started five months ago, shortly before ChatGPT sparked expectations, in the tech industry and beyond, that we are on the cusp of a wave of AI disruption and somehow closer to the nebulous concept of artificial general intelligence, or AGI. There’s no consensus about the definition of AGI, but the museum calls it the ability to understand or learn any intellectual task that a human can.

Kim says the museum is meant to raise conversations about the destabilizing implications of supposedly intelligent technology. The collection is split across two floors, with more optimistic visions of our AI-infused future upstairs, and dystopian ones on the lower level.

Upstairs there’s piano music composed with bacteria, an interactive play on Michelangelo’s “Creation of Adam” from the Sistine Chapel, and soon an installation that uses computer vision from Google to describe people and objects that appear in front of a camera.

Downstairs is art from Matrix: Resurrections (a set designer on the movie, Barbara Munch Cameron, helped plan the museum’s layout), a never-ending AI-generated conversation between Slavoj Žižek and Werner Herzog, and a robotic arm holding a pen that writes notes from the perspective of an AI that views humans as a threat.

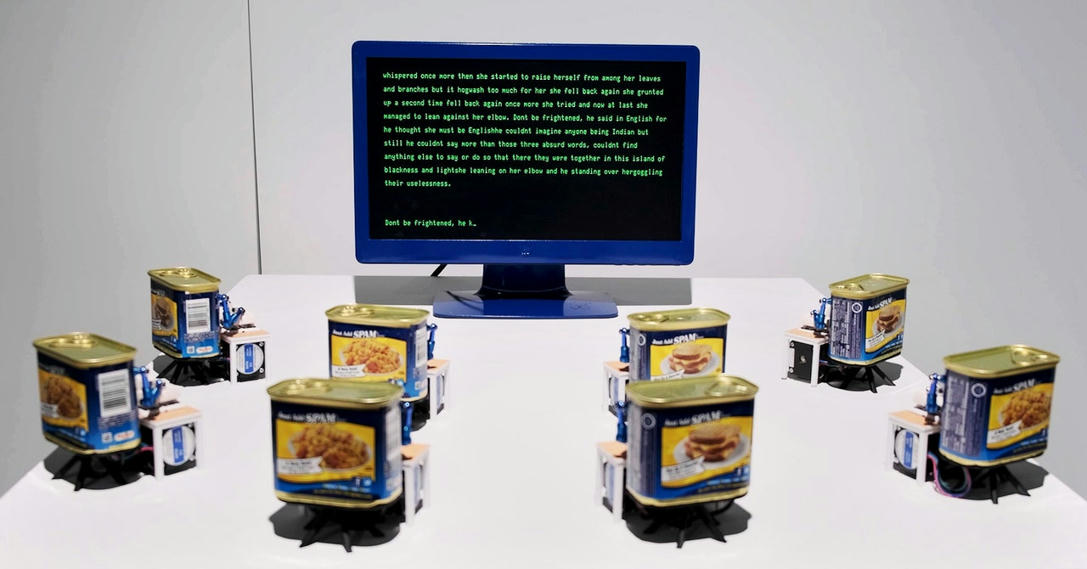

“This is the gates-to-hell selfie spot,” Kim says, pointing out a quote from Dante above the entrance to the lower section of the museum: “Abandon all hope ye who enter here.” The museum is also home to a deepfake of Arnold Schwarzenegger speaking from a script generated by ChatGPT, a statue of two people embracing made from 15,000 paper clips that’s meant to be an allegory about AI safety, and robots flown in from Vienna made from Spam tins with little arms that type.

“There’s so much goodness this kind of tech can engender, so much suffering it can prevent, and then there’s the aspect of how much destruction it can cause and how much can get lost in that process,” says Kim, who was an early employee of Google and has also worked at the United Nations and as an assistant to Paul Graham, cofounder of the Y Combinator startup incubator. “I don’t think we have all the answers. We just need to be very pro-human and give people the critical means to think about this tech.”

The temporary exhibit is funded until May by an anonymous donor, but the space shows the influence of a number of tech founders and thinkers, from a framed tweet about AI and effective altruism by Facebook cofounder Dustin Moskovitz to the bathtub full of pasta near the exhibit entrance. The latter is a reference to the ideas of Holden Karnofsky, a cofounder with Moskovitz of Open Philanthropy, which has funded OpenAI and other work concerned with the future path of AI. Karnofsky has argued that we’re living in what may be the most important century in human history, before the potential arrival of transformational AI he calls Process for Automating Scientific and Technological Advancement, or PASTA.

Attempts to predict the distant future of AI have recently come to center on ChatGPT and related technology, like Microsoft’s new search interface, despite its well-documented limitations and still unclear value. Entrepreneurs betting on the technology are making bombastic claims about what their tech is capable of doing. Last week, OpenAI’s CEO published a blog post about how the company is “Planning for AGI.”

Shortly thereafter, the US Federal Trade Commission, an agency that protects against deceptive business practices, warned marketers against making false claims about what their technology is capable of doing. FTC attorney Michael Atleson wrote in his own blog post that “we’re not yet living in the realm of science fiction, where computers can generally make trustworthy predictions of human behavior.”

Many AI researchers believe that, while generative AI systems like ChatGPT can be impressive, the technology is unworthy of being credited with intelligence, because the algorithm only repeats and remixes patterns from its training data. In this view, it’s better to think of the narrow AI of today as more like a calculator or toaster, not a sentient being. Some AI ethicists believe that ascribing human characteristics like sentience to technology can distract from conversations about other forms of harmful automation such as surveillance technology being exported by companies in democratic and authoritarian nations alike.

Kim says she first became familiar with machine learning as an early hire at autonomous-driving startup Cruise, later acquired by General Motors. She began to think about how AI that works properly could eliminate much unnecessary death and suffering—but also that if the technology doesn’t work it could present a huge risk to human lives.

In addition to displaying AI art and art about AI, the Misalignment Museum plans to host screenings of movies that explore the darker potential of the technology, like The Terminator, Ex Machina, Her, and Theater of Thought, a 2022 documentary about neuroscience and AI directed by Herzog.

Read the full article at: www.wired.com
